Keir is an AEC Domain Expert working at the intersection of architecture practice, sustainable development and software design. With over 15 years in practice, he has crafted high-quality projects across numerous sectors, including education, health, housing, and workplaces. He helps Architects, Clients and Startups thrive in an ever-changing industry. Connect on LinkedIn.

A new week, a new way for Generative AI to blow our minds.

Images generated from text prompts have now filled my news feed; they’ve swiftly ascended the viral ladder, triggered heated debates and gained ‘meme status.’ When these arresting visuals started appearing, it felt like every novel experiment demanded our close attention: “What has this inexplicable new tool done now?!”

Yet modern attention spans are increasingly short, and bold imagery can quickly become ubiquitous. Our sense of wonder is easily replaced by boredom and ambivalence.

Incredibly, the development of Generative AI actually appears to be evolving faster than our agitated modern attention spans. Just as I was beginning to become nonplussed by the latest hybridization of Batman X The Simpsons, I discovered sketch-to-render.

Most people are familiar with models that use simple text prompting, where you describe everything about a composition using words only. A lot can be achieved with these tools, but when it comes to exact composition and configuration, you’re working at the model’s behest. However, fewer architects are aware that you can now combine an image with a text prompt to further your creative control.

While these are enormously promising developments, it has been hard to understand exactly how an architect might be able to use these tools. How can we use them to augment the design and visualization processes we’re already doing? In architecture, we work in the gritty reality, not the synthetic imagination of AI. Planning and construction is a messy business that requires precision solutions.

Yet sketch-to-render is a different kind of approach and takes user control to the next level again. It employs an additional step in the generation process called ControlNets, which allow for much greater control over how an image is constructed and where the trained model will go to work on a composition. Think of ControlNets as a framework or bounding box within which the AI will go to work; it puts you in the driving seat of the model’s explorations.

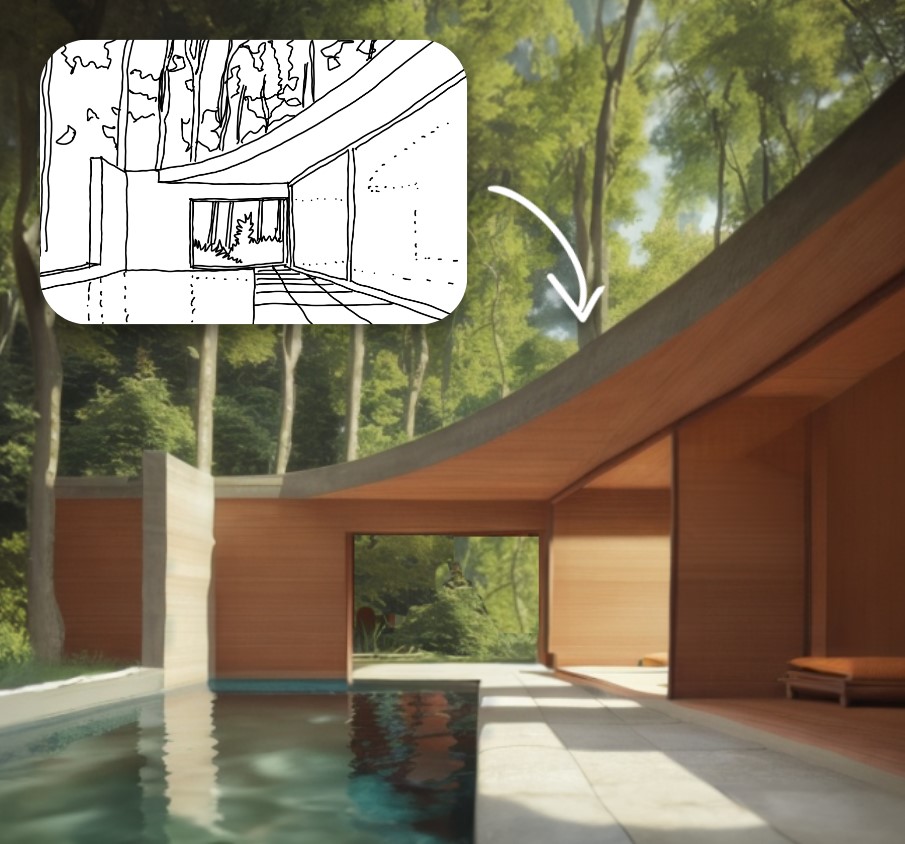

Sketch by author (of NWLND’s “Refuge” project), render produced in PromeAI

Sketch-to-Render

This is a 20-minute process and the idea here is to go straight from primitive line work to vivid render.

Midjourney can produce incredibly high-quality and vivid imagery, but offers limited control over the exacting composition of the subject matter. For fields like architecture, the ability to fix the areas within an image around which the model will iterate is absolutely essential for actual tool adoption and use.

There are now various methods to combine generative image tools with ‘fixed’ image subjects and composition to give more exacting control over a single viewpoint and to then iterate ideas on top.

Here are some good emerging methods that are worth experimenting with:

- ControlNet: An extension to Stable Diffusion that uses a preprocessor to create an abstract map of the input image (such as a segmentation) and then combines this with a text prompt. The installation is tricky for regular users and the software needs a powerful computer. If you can’t run this locally due to hardware, you can now do it in the cloud, where Stable Diffusion with ControlNet is being hosted by various providers.

- PromeAI: The easiest interface that I’ve tried for sketches, complete with preset filters and styles. Its mostly free baseline features are powerful and worth a play. The workflow is simple: just log in, upload a sketch or hidden line view, add text description prompts and off you go.

- Veras: This works directly within the viewport of everyday software interfaces (SketchUp/Rhino/Revit). It’s simple and easy to use and is frictionless because it’s a 3D CAD plugin. The more detail and surface materials you can add, the better it will do at recognizing components.
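
To make the preprocessor step a little more concrete, here is a minimal sketch in plain Python. It assumes a grayscale sketch stored as a nested list of 0–255 values; the function name `sketch_to_line_map` and the threshold of 128 are illustrative assumptions rather than anything taken from ControlNet itself, and real preprocessors (edge, scribble or segmentation detectors) are far more sophisticated.

```python
def sketch_to_line_map(gray, threshold=128):
    """Turn a grayscale sketch (dark pen on light paper) into a binary line map.

    Returns 255 where a pen stroke was found and 0 elsewhere, i.e. white
    lines on a black background. Treating this as the control image format
    is an assumption made for illustration.
    """
    return [[255 if px < threshold else 0 for px in row] for row in gray]


# A tiny 4x4 stand-in for a scanned sketch: a dark diagonal stroke on light paper.
sketch = [
    [20, 240, 240, 240],
    [240, 30, 240, 240],
    [240, 240, 25, 240],
    [240, 240, 240, 10],
]

line_map = sketch_to_line_map(sketch)
```

The resulting line map is the kind of simplified guide that, together with a text prompt, constrains where the model draws.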

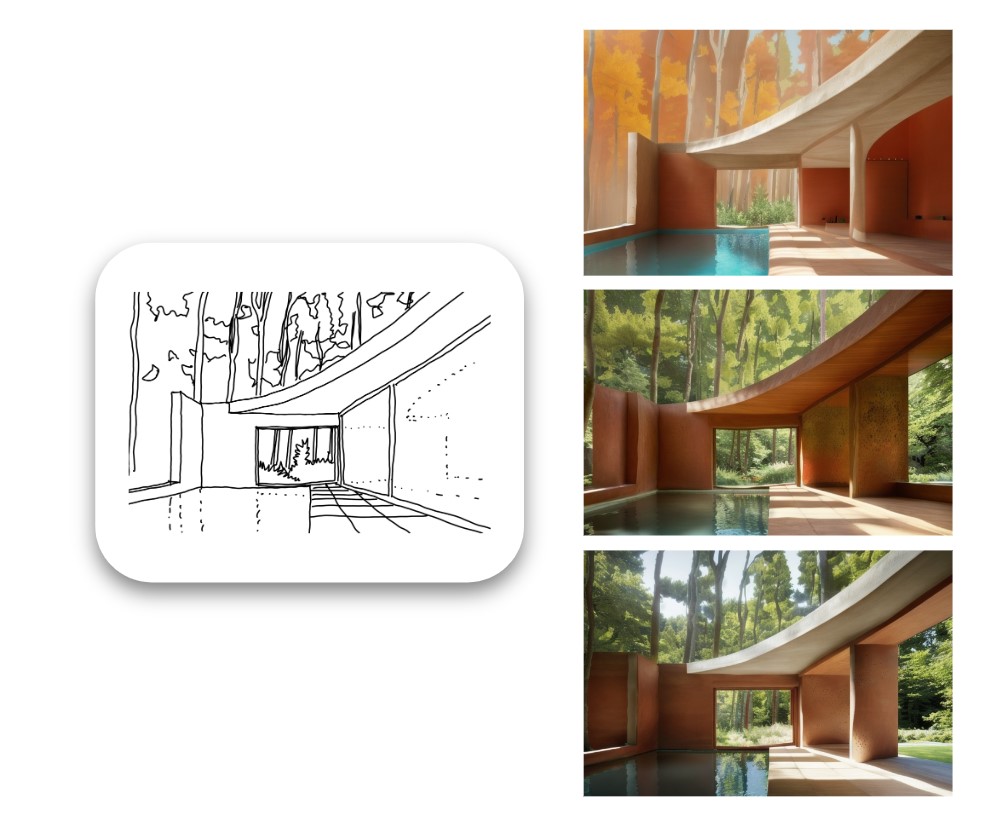

Example of various rendered outputs produced from the original sketch

Sketch-to-Render-in-Context

This is a more complex process and takes about 30 minutes once you understand how to do it.

The direct sketch-to-render tools are great to use, but having experimented with them in detail, I felt they were best suited to interior design work only. When it comes to exterior envelope and massing, we always need to place our ideas in context and render with appropriate scale, visualizing the buildings and landscapes within which they sit.

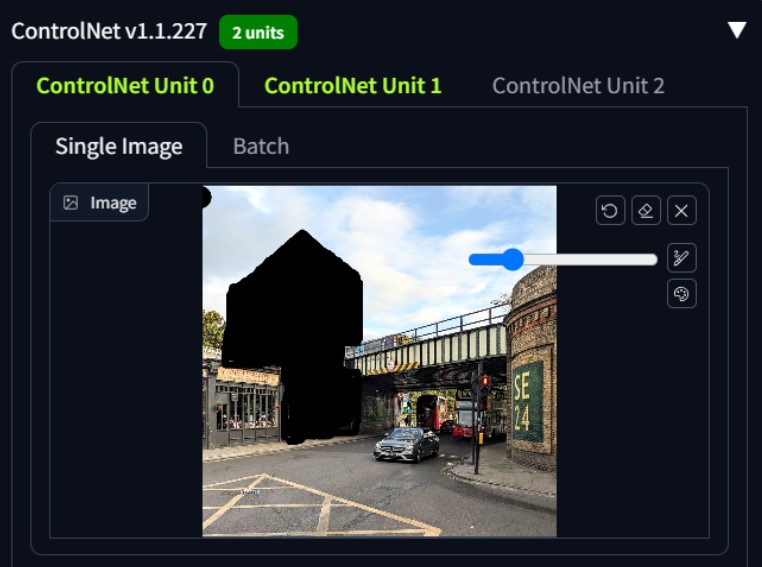

Then, I discovered the idea of using two ControlNets together: one for a process called “in-painting” and the second for the sketch proposal. There is quite a bit of trial and error to get the workflow right, but it’s made possible by running Stable Diffusion with the ControlNet model on your local machine and is repeatable for any photo and sketch combo (provided you can draw).

Photograph taken by author of an imaginary development site while on a cycle ride home

The aforementioned simple “sketch-to-render” process works with one ControlNet active. However, you can now use Stable Diffusion with a second ControlNet at the same time, which can be used for a process called “in-painting.” This allows you to tell the model exactly which parts of a source image you want to experiment with and which you want to leave exactly as they are.
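
The effect of an in-painting mask can be sketched in a few lines of plain Python. This is only an illustration of the idea, assuming images stored as nested lists of pixel values and the convention that black (0) marks the area the model may experiment upon. The function name `composite_with_mask` is hypothetical, and in the real workflow the masking happens inside the diffusion process rather than as a final paste.

```python
def composite_with_mask(original, generated, mask):
    """Keep original pixels where the mask is non-zero; use generated pixels where it is black (0)."""
    return [
        [gen if m == 0 else orig for orig, gen, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


site_photo = [[1, 1, 1], [1, 1, 1]]    # stand-in for the site photograph
model_output = [[9, 9, 9], [9, 9, 9]]  # stand-in for freshly generated pixels
mask = [[255, 0, 0], [255, 255, 0]]    # black (0) = area to experiment upon

result = composite_with_mask(site_photo, model_output, mask)
# result == [[1, 9, 9], [1, 1, 9]]
```

Everything outside the black region comes through untouched, which is what keeps the surrounding buildings and landscape of the photograph intact.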

What I like about this application of Generative AI is that it relies entirely on your direction and discretion as a designer and what you do with the pen. It takes out the “middle-man” of painstaking digital modeling of an idea and goes straight to vivid imagery. This is rendering without the hours of boring 3D modeling.

In a world where you can get straight to a high-quality render with just a site photo, a sketch and an ability to describe your idea in the form of an effective prompt, you immediately bypass the need to build detailed 3D models of initial concept ideas.

Design sketch by author, drawn quickly directly on top of original photograph.

Arguably, most major practices working with developers have to run projects with a high degree of waste. Options are tested, digitally modeled in 3D, rendered, photoshopped and perhaps mocked up quickly in foam or card for a client to review. Then we respond to comments, requests for changes, new constraints and new information, and a continuous process of change occurs.

Throughout this design process, each rendition must be exhaustively conceived, drawn and modeled before it can be vividly represented in context; this means much of the earlier work is thrown away almost immediately. Rapid iteration tooling could massively reduce the waste and grunt work associated with the process we call “optioneering.” This term isn’t used affectionately in practice because it can feel so non-linear, but unfortunately some form of option testing is always required to discover a design and each option requires a lot of time and energy.

Screenshot produced by author showing process of in-painting using Stable Diffusion with two ControlNets – the black area tells the model which part of the image to experiment upon

In a race to produce powerful concept imagery for a new feasibility study or competition, someone who can draw their ideas well and uses a tool like this is going to beat 3D modeling on turnaround time and speed of iteration.

Soon, clients will be getting rendered ideas in a matter of days from a commission, not weeks. Their expectations about what is possible in a given timeframe are going to change, and quickly.

There remains a good deal of room for improvement, but the foundation is there for a very different approach to design and visualization that could be really empowering for architects (and clients too).

Final image produced by author using Stable Diffusion with two ControlNets for an imaginary project in Herne Hill, London. The image was produced in less than an hour including sketching time.

My first attempt is a bit rough but demonstrates the huge potential here; imagine how powerful this will be for early stage feasibility work. I definitely don’t love the outcome, but it’s some version of what I was thinking in the sketch. I’d still be happy to present this image to a client as an early study at feasibility stage to give them a more vivid sense of a project’s massing and scale alongside a set of 2D drawings before developing the preferred option in fine detail myself.

In the example shown, I’d estimate the model achieved about 50% of my line intentions and about 20% of my material intent on the façades. However, the perspective, massing, lighting, context placement, reflections and sense of scale are all bang on, and all this is done with a general purpose, open-source model.

As this technology continues to improve, specialized architecture models will be trained on data sets that focus specifically on façade and architectural composition. Different architectural styles and materiality options will be made possible and they will be far better at understanding façade componentry such as floor zones, balustrades, windows, curtain walling and columns. The models will need to learn “archispeak,” which will now be expressed using text prompt inputs and require architects to say what they actually mean in simple language.

As these models improve, we will be able to discern discrete elements within the design concept, identify them as architectural building components and then refine them directly with prompts as we work. We will be able to apply different prompts to different parts of the image, add people, and change the lighting and mood, designing over and over in a live render environment without modeling anything, all potentially driven from a sketch idea.

There will be many more experiments to come… and when Midjourney can do ControlNets too, it will probably feel like “game over” for much of the traditional 3D modeling and rendering that we do today.


With thanks to:

Ismail Seleit, who was the first person I saw demonstrate this idea.

@design.input, who put out a great video that helped to describe each step of the process.

Hamza Shaikh, for pointing me at ControlNet in the first place when I got frustrated with Midjourney.
