Researchers from the GrapheneX-UTS Human-centric Artificial Intelligence Centre have developed a portable, non-invasive system that can decode silent thoughts and turn them into text.

In a world first, researchers from the GrapheneX-UTS Human-centric Artificial Intelligence Centre at the University of Technology Sydney (UTS) have developed a portable, non-invasive system that can decode silent thoughts and turn them into text.

The technology could aid communication for people who are unable to speak due to illness or injury, including stroke or paralysis. It could also enable seamless communication between humans and machines, such as the operation of a bionic arm or robot.

The study has been selected as the spotlight paper at the NeurIPS conference, a top-tier annual meeting that showcases world-leading research on artificial intelligence and machine learning, to be held in New Orleans on 12 December 2023.

The research was led by Distinguished Professor CT Lin, Director of the GrapheneX-UTS HAI Centre, together with first author Yiqun Duan and fellow PhD candidate Jinzhou Zhou from the UTS Faculty of Engineering and IT.

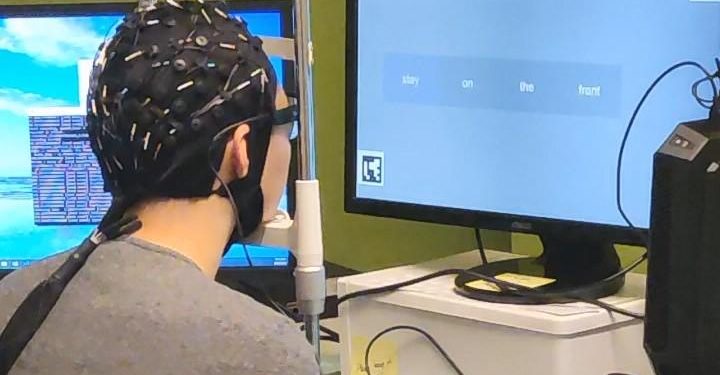

In the study, participants silently read passages of text while wearing a cap that recorded electrical brain activity through their scalp using an electroencephalogram (EEG). A demonstration of the technology can be seen in this video.

The EEG wave is segmented into distinct units that capture specific characteristics and patterns from the human brain. This is done by an AI model called DeWave, developed by the researchers. DeWave translates EEG signals into words and sentences by learning from large quantities of EEG data.
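The article says that DeWave splits the EEG wave into distinct units but does not say how. As a hypothetical illustration of what "segmenting" a recording can mean, the sketch below cuts a multichannel signal into fixed-length, overlapping windows. The `segment_signal` name, the window length, and the stride are assumptions, not DeWave's design; a real system would pass each slice to a learned encoder, which this sketch leaves out.

```python
from typing import List, Sequence


def segment_signal(
    signal: Sequence[Sequence[float]],
    window: int,
    stride: int,
) -> List[List[List[float]]]:
    """Split a multichannel signal into fixed-length, possibly overlapping windows.

    `signal` is indexed as signal[channel][sample]. Each returned window keeps
    every channel: window[channel][sample_in_window]. Trailing samples that do
    not fill a whole window are dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if not signal:
        return []
    n_samples = len(signal[0])
    if any(len(ch) != n_samples for ch in signal):
        raise ValueError("all channels must have the same length")
    segments = []
    for start in range(0, n_samples - window + 1, stride):
        segments.append([list(ch[start:start + window]) for ch in signal])
    return segments


# Toy data: 2 channels x 10 samples (made-up values, not real EEG).
eeg = [
    [float(i) for i in range(10)],
    [float(-i) for i in range(10)],
]
windows = segment_signal(eeg, window=4, stride=2)
print(len(windows))   # 4 (windows start at samples 0, 2, 4 and 6)
print(windows[1][0])  # [2.0, 3.0, 4.0, 5.0]
```

Overlapping windows (stride smaller than the window) are a common way to avoid losing a pattern that straddles a boundary, at the cost of producing more segments.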

“This research represents a pioneering effort in translating raw EEG waves directly into language, marking a significant breakthrough in the field,” said Distinguished Professor Lin.

“It is the first to incorporate discrete encoding techniques in the brain-to-text translation process, introducing an innovative approach to neural decoding. The integration with large language models is also opening new frontiers in neuroscience and AI,” he said.
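A standard way to turn continuous feature vectors into discrete codes is vector quantization. Each vector is replaced by the index of its nearest entry in a codebook, so a continuous signal becomes a sequence of integer tokens, the kind of input language models work with. The sketch below uses a small hand-written codebook and squared Euclidean distance. Both are assumptions made for illustration. It shows the general technique only and is not DeWave's encoder, which would learn its codebook from EEG data.

```python
import math
from typing import List, Sequence


def quantize(
    features: Sequence[Sequence[float]],
    codebook: Sequence[Sequence[float]],
) -> List[int]:
    """Map each feature vector to the index of its nearest codebook entry.

    Distance is squared Euclidean. Ties go to the lowest index. The returned
    indices are the discrete "tokens" standing in for the continuous input.
    """
    codes = []
    for vec in features:
        best_idx, best_dist = -1, math.inf
        for idx, entry in enumerate(codebook):
            dist = sum((a - b) ** 2 for a, b in zip(vec, entry))
            if dist < best_dist:
                best_idx, best_dist = idx, dist
        codes.append(best_idx)
    return codes


# Hypothetical 2-D features and a 3-entry codebook (illustrative values only).
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]
features = [[0.1, -0.2], [0.9, 1.2], [-0.8, 0.7], [0.6, 0.6]]
print(quantize(features, codebook))  # [0, 1, 2, 1]
```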

Previous technology to translate brain signals into language has required either surgery to implant electrodes in the brain, as with Elon Musk’s Neuralink, or scanning in an MRI machine, which is large, expensive, and difficult to use in daily life.

These methods also struggle to transform brain signals into word-level segments without additional aids such as eye-tracking, which restricts the practical application of these systems. The new technology can be used with or without eye-tracking.
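Eye-tracking helps because it records when the reader was looking at each word, which lets the EEG be cut at word boundaries. The sketch below illustrates that idea. It assumes the fixations are already available as hypothetical `(word, start_seconds, end_seconds)` tuples and that the EEG is a list of channels recorded at a known sampling rate. It is not the study's pipeline. Without eye-tracking there are no such boundaries, and a model has to work from the continuous signal instead.

```python
from typing import List, Sequence, Tuple


def slice_by_fixations(
    signal: Sequence[Sequence[float]],
    sample_rate_hz: float,
    fixations: Sequence[Tuple[str, float, float]],
) -> List[Tuple[str, List[List[float]]]]:
    """Cut a multichannel EEG recording into word-level segments.

    `signal` is indexed as signal[channel][sample]. `fixations` holds
    hypothetical (word, start_seconds, end_seconds) tuples from an eye tracker.
    Each returned segment keeps every channel for that fixation interval.
    """
    n_samples = len(signal[0]) if signal else 0
    segments = []
    for word, start_s, end_s in fixations:
        start = max(0, round(start_s * sample_rate_hz))
        end = min(n_samples, round(end_s * sample_rate_hz))
        if end <= start:
            continue  # fixation is empty or lies outside the recording
        segments.append((word, [list(ch[start:end]) for ch in signal]))
    return segments


# Toy data: 2 channels sampled at 10 Hz for 2 seconds (not real EEG).
eeg = [[float(i) for i in range(20)], [2.0 * i for i in range(20)]]
fixations = [("the", 0.0, 0.3), ("author", 0.3, 0.8), ("wrote", 2.5, 3.0)]
segments = slice_by_fixations(eeg, 10.0, fixations)
print([word for word, _ in segments])  # ['the', 'author'] ("wrote" falls after the recording ends)
```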

The UTS research was carried out with 29 participants. Because EEG waves differ between individuals, this means the system is likely to be more robust and adaptable than previous decoding technology that has only been tested on one or two people.
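One common way to test whether a decoder generalizes across people is a leave-one-subject-out split. Each participant's recordings are held out in turn, and the model is trained on everyone else's. The article does not say which evaluation split the UTS study used. The sketch below only shows how such folds are formed, using hypothetical participant IDs.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject.

    subject_ids[i] names the participant who produced trial i, so no
    participant ever appears in both the training and test indices of a fold.
    """
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Hypothetical trial labels: which participant produced each recorded trial.
trials = ["p01", "p01", "p02", "p03", "p03", "p03"]
for subject, train_idx, test_idx in leave_one_subject_out(trials):
    print(subject, train_idx, test_idx)
```

With only one or two participants, this kind of split leaves few or no other people to train on, which is one reason a 29-person dataset says more about cross-person robustness.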

Using EEG signals received through a cap, rather than from electrodes implanted in the brain, means that the signal is noisier. In terms of EEG translation, however, the study reported state-of-the-art performance, surpassing previous benchmarks.

“The model is better at matching verbs than nouns. However, when it comes to nouns, we saw a tendency towards synonymous pairs rather than precise translations, such as ‘the man’ instead of ‘the author’,” said Duan.

“We think this is because, when the brain processes these words, semantically similar words might produce similar brain wave patterns. Despite the challenges, our model yields meaningful results, aligning keywords and forming similar sentence structures,” he said.

The translation accuracy score is currently around 40% on BLEU-1. The BLEU score is a number between zero and one that measures how similar the machine-translated text is to a set of high-quality reference translations. The researchers hope to see this improve to a level comparable to traditional language translation or speech recognition programs, which is closer to 90%.
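BLEU-1 is the unigram-only variant of BLEU. It counts how many words of the output also appear in a reference, with each word's credit capped at its count in the reference. It then multiplies that precision by a brevity penalty for outputs shorter than the reference. The sketch below is a minimal sentence-level version written from the standard definition. It is not the study's evaluation code, which may use a library implementation or corpus-level scoring. The example sentences are hypothetical and echo the "the man" versus "the author" substitution described above.

```python
import math
from collections import Counter
from typing import Sequence


def bleu1(candidate: Sequence[str], references: Sequence[Sequence[str]]) -> float:
    """Sentence-level BLEU-1: clipped unigram precision times a brevity penalty."""
    if not candidate or not references:
        return 0.0
    # Clip each word's credit at the most times it occurs in any one reference,
    # so repeating a correct word cannot inflate the score.
    max_ref_counts: Counter = Counter()
    for ref in references:
        for word, count in Counter(ref).items():
            max_ref_counts[word] = max(max_ref_counts[word], count)
    clipped = sum(min(n, max_ref_counts[w]) for w, n in Counter(candidate).items())
    precision = clipped / len(candidate)
    # Brevity penalty: compare against the reference length closest to the
    # candidate's length (ties go to the shorter reference).
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * precision


reference = "the author wrote the book".split()
print(bleu1("the man wrote the book".split(), [reference]))  # 0.8
print(bleu1("the man wrote".split(), [reference]))  # lower: fewer matches and a brevity penalty
```

In the first example, swapping one noun for a near-synonym still scores 0.8, because BLEU-1 only checks word overlap. A score of about 0.4 therefore means that, roughly speaking, fewer than half of the output words match the reference.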

The research follows on from previous brain-computer interface technology developed by UTS in association with the Australian Defence Force, which uses brainwaves to command a quadruped robot, as demonstrated in this ADF video.

Original Article: Portable, non-invasive, mind-reading AI turns thoughts into text
